Testing is often done without any load, or with only a small amount of capacity in use. When I test, I like the HyperFlex cluster to be at least 50% full. How can you achieve this when compression and deduplication are enabled by default?

Linux VM

First, create a Linux VM on the HyperFlex cluster. In this case I used Ubuntu, thin provisioned, with 2 vCPUs, 4 GB of memory, and a 2 TB disk.

When the installation is done, check whether cryptsetup is installed. If it is not, install it with:

sudo apt install cryptsetup-bin

If that does not work right away, refresh the package index first: sudo apt update
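A minimal sketch of that check, so the install only runs when it is needed. The helper name `have_cmd` is made up for this example; `command -v` is the standard shell way to test whether a command is on the PATH.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when the given command is available on PATH.
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

if have_cmd cryptsetup; then
    echo "cryptsetup is installed"
else
    echo "cryptsetup is missing; run: sudo apt update && sudo apt install cryptsetup-bin"
fi
```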

Now we can create a big file with:

sudo fallocate -l 500G BigFile

This command creates a 500 GiB file named BigFile. Although the file shows up with its full size inside the VM, fallocate only reserves the blocks and writes no data, so almost nothing happens on the HyperFlex cluster.
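How large to make the file depends on how full the cluster should become. The arithmetic can be sketched as below. The helper `fill_needed_gib` and the numbers are made up for illustration, and the sketch assumes the capacity and used values are the usable figures reported for the cluster.

```shell
#!/bin/sh
# Hypothetical helper: GiB still to be written to reach a target fill level.
# Arguments: usable capacity (GiB), currently used (GiB), target percentage.
fill_needed_gib() {
    capacity="$1"
    used="$2"
    target_pct="$3"
    needed=$(( capacity * target_pct / 100 - used ))
    # Already at or above the target: nothing left to write.
    if [ "$needed" -lt 0 ]; then
        needed=0
    fi
    echo "$needed"
}

# Example with made-up numbers: 10000 GiB usable, 1000 GiB used, 50% target.
fill_needed_gib 10000 1000 50
```

The result is a starting point for the size passed to fallocate -l; the virtual disk and its file system must of course be large enough to hold it.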

Now for the trick.

You can use shred or dd with /dev/urandom to fill the VM with random data, which is hard to compress and deduplicate. The disadvantage of that method is that it takes a lot of time. The following method is much quicker:

Loop devices (/dev/loopX) let a file be used as block storage. There are usually 8 of them, /dev/loop0 through /dev/loop7. You can see which ones are in use with:

sudo losetup

Just pick one that is not in use and run:

sudo losetup /dev/loop7 BigFile

The file BigFile is now attached to /dev/loop7.
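Instead of picking a device by hand, losetup can choose one itself. This is a small sketch of that variation, not the method used above: `--find` selects the first unused loop device and `--show` prints its name. The function name `attach_backing_file` is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: attach a file to the first unused loop device
# and print the device name (for example /dev/loop7).
attach_backing_file() {
    sudo losetup --find --show "$1"
}

# Usage (needs root, so it is not run here):
#   LOOPDEV=$(attach_backing_file BigFile)
#   echo "BigFile is attached to $LOOPDEV"
```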

Next, we encrypt this block device:

sudo cryptsetup luksFormat /dev/loop7

You have to confirm by typing YES in capitals and then set a passphrase. We need this passphrase for the next command.

After a couple of seconds we can type:

sudo cryptsetup luksOpen /dev/loop7 testdev

testdev is just a name for the mapping; it appears as /dev/mapper/testdev.

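Now we can fill this encrypted device with zeros. Because every write passes through the encryption layer, the zeros land on disk as ciphertext that looks random, so it barely compresses or deduplicates.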

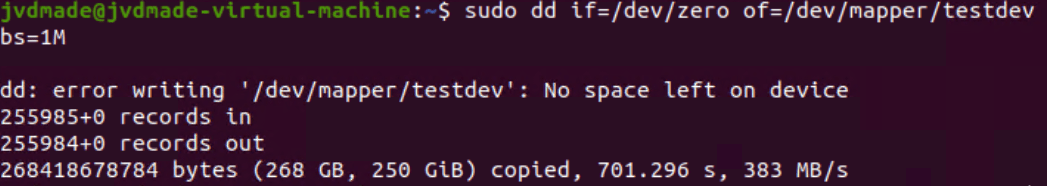

sudo dd if=/dev/zero of=/dev/mapper/testdev bs=1M

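In this case the block size is 1 MiB. dd keeps writing until the device is full and then stops with a "No space left on device" message, which is expected here.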
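The steps so far can be collected into one script. This is only a sketch under a few assumptions: the variable names, the `run` wrapper and the dry-run default are additions for this example, and `status=progress` is a GNU dd option that is not part of the original command. With DRY_RUN=1 the script only prints the commands; with DRY_RUN=0 it executes them, and luksFormat and luksOpen will still prompt interactively.

```shell
#!/bin/sh
# Settings; the defaults mirror the values used in the text.
DRY_RUN="${DRY_RUN:-1}"
FILE="${FILE:-BigFile}"
SIZE="${SIZE:-500G}"
LOOPDEV="${LOOPDEV:-/dev/loop7}"
NAME="${NAME:-testdev}"

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

fill_steps() {
    run sudo fallocate -l "$SIZE" "$FILE"
    run sudo losetup "$LOOPDEV" "$FILE"
    run sudo cryptsetup luksFormat "$LOOPDEV"
    run sudo cryptsetup luksOpen "$LOOPDEV" "$NAME"
    # dd ends with "No space left on device" once the mapping is full.
    run sudo dd if=/dev/zero of="/dev/mapper/$NAME" bs=1M status=progress
}

fill_steps
```

Running it once in dry-run mode is a cheap way to check the device and file names before anything is written.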

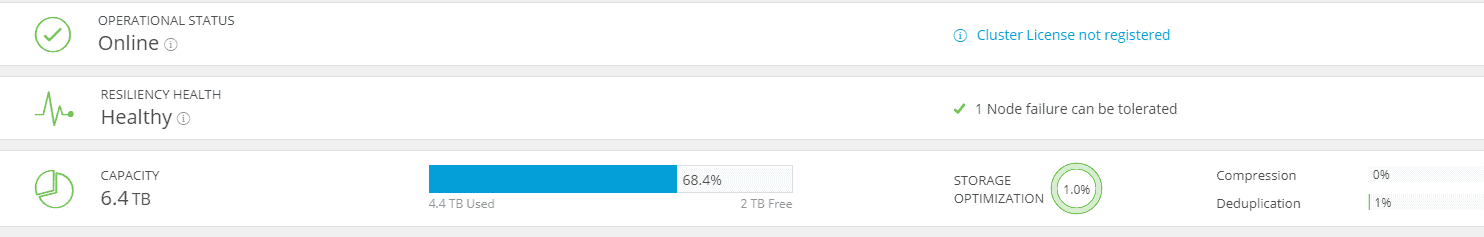

This method is much faster, and it fills up your HyperFlex cluster. As you can see, the compression and deduplication savings are very low.
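The low savings follow from how encrypted data looks to the storage layer. The effect can be reproduced on any Linux machine without touching the cluster. In this sketch openssl with AES-256-CTR and gzip are only stand-ins for the dm-crypt layer and for HyperFlex compression, and the passphrase "demo" and the function names are made up.

```shell
#!/bin/sh
# Size in bytes of 1 MiB of zeros after gzip.
plain_size() {
    head -c 1048576 /dev/zero | gzip -c | wc -c
}

# Size in bytes of 1 MiB of zeros after encryption and then gzip.
encrypted_size() {
    head -c 1048576 /dev/zero \
        | openssl enc -aes-256-ctr -pbkdf2 -pass pass:demo \
        | gzip -c | wc -c
}

echo "zeros, gzip:           $(plain_size) bytes"
echo "encrypted zeros, gzip: $(encrypted_size) bytes"
```

The plain zeros shrink to a tiny fraction of their size, while the encrypted stream stays at roughly 1 MiB because ciphertext has no patterns left to compress.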

And here you see a system filled to almost 70%.

Use this method only for testing the cluster.
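Once the test is finished, the mapping and the loop device can be released again. Cleanup is not covered above, so treat this as a sketch: the function name `teardown_fill` is made up, and the defaults assume the names used earlier (testdev, /dev/loop7, BigFile).

```shell
#!/bin/sh
# Hypothetical helper: undo the setup in reverse order.
# Arguments (all optional): mapping name, loop device, backing file.
teardown_fill() {
    td_name="${1:-testdev}"
    td_loopdev="${2:-/dev/loop7}"
    td_file="${3:-BigFile}"
    sudo cryptsetup luksClose "$td_name"   # remove /dev/mapper/<name>
    sudo losetup -d "$td_loopdev"          # detach the loop device
    sudo rm -f "$td_file"                  # delete the backing file
}

# Usage (needs root, so it is not run here):
#   teardown_fill testdev /dev/loop7 BigFile
```

Deleting the file frees space inside the VM, but the space on the cluster may only be released once the virtual disk or the test VM itself is deleted.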

Hi Joost! I just want to ask whether you have tried rebooting an HX node at full memory capacity (let's say memory usage is 90% on each node according to vCenter). If I reboot the node, will the VMs running on it move to the 2 other nodes?

I didn't test it with every kind of CPU and memory load.

Always make sure there is room to run all the VMs on the remaining nodes when there is a failure.
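A back-of-the-envelope check for that rule, assuming identical nodes, evenly spread load and a single node failure. The function name `fits_after_failure` is made up, and the check ignores vSphere HA admission control, reservations and overcommit.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when the load of all nodes still fits
# on the nodes that remain after one node fails.
# Arguments: number of nodes, used percentage per node.
fits_after_failure() {
    ff_nodes="$1"
    ff_used_pct="$2"
    ff_total_used=$(( ff_nodes * ff_used_pct ))    # in "percent of one node"
    ff_remaining=$(( (ff_nodes - 1) * 100 ))       # capacity of surviving nodes
    [ "$ff_total_used" -le "$ff_remaining" ]
}

if fits_after_failure 3 90; then
    echo "3 nodes at 90%: fits on the remaining nodes"
else
    echo "3 nodes at 90%: does not fit on the remaining nodes"
fi
```

For the case in the question, three nodes at 90% carry 270% of one node's memory, while the two surviving nodes offer only 200%, so not all VMs can keep running. With three nodes the limit under these assumptions is about 66% per node.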